Everything you need to know about the latest version of Claude

Anthropic's updated Sonnet model can click, type and perceive your screen. It can even tweak code directly in your editor.

Anthropic has dropped a major update for Claude. No longer simply passive software that answers your questions and writes your code, its flagship Sonnet model can now use a computer.

It can click. It can take screenshots. It can type. It can perceive a screen.

It can fill out a form and send it. It can search Google, make calendar events and plan routes. It can write code right in your desktop editor.

The skills are basic for now, and Anthropic warns that Claude is still error-prone. But Anthropic says it is the first frontier AI model to offer computer use in public beta.

Computing with Claude

Claude 3.5 Sonnet now has basic computer skills, still in beta, that it can use to operate a real computer.

Developers can access these capabilities via the Anthropic API, Amazon Bedrock, and Google Cloud’s Vertex AI.
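For developers, the feature is exposed as tools in an ordinary Messages API request. Here is a minimal Python sketch. It assumes the request shape Anthropic documented at launch: the beta flag `computer-use-2024-10-22`, the model ID `claude-3-5-sonnet-20241022`, and the tool types `computer_20241022`, `text_editor_20241022` and `bash_20241022`. Those identifiers may have changed since, and Bedrock and Vertex AI use their own request formats, so treat this as an illustration rather than a reference. The function name `build_computer_use_request` is hypothetical. The code only assembles the request and sends nothing.

```python
import json

# Identifiers from Anthropic's computer-use launch (October 2024).
# Assumptions: check the current API docs before relying on them.
COMPUTER_USE_BETA = "computer-use-2024-10-22"
MODEL = "claude-3-5-sonnet-20241022"


def build_computer_use_request(prompt, width=1024, height=768):
    """Hypothetical helper: return (headers, body) for a Messages API call
    with the computer-use tools enabled. Nothing is sent over the network;
    the caller adds an "x-api-key" header and uses their own HTTP client."""
    headers = {
        "content-type": "application/json",
        "anthropic-version": "2023-06-01",
        "anthropic-beta": COMPUTER_USE_BETA,
    }
    body = {
        "model": MODEL,
        "max_tokens": 1024,
        "tools": [
            # Screen, mouse and keyboard control at the given resolution.
            {
                "type": "computer_20241022",
                "name": "computer",
                "display_width_px": width,
                "display_height_px": height,
            },
            # File viewing/editing and shell access, as used for coding tasks.
            {"type": "text_editor_20241022", "name": "str_replace_editor"},
            {"type": "bash_20241022", "name": "bash"},
        ],
        "messages": [{"role": "user", "content": prompt}],
    }
    return headers, body


if __name__ == "__main__":
    _, request_body = build_computer_use_request(
        "Open a browser and create a calendar event for sunrise tomorrow."
    )
    print(json.dumps(request_body, indent=2))
```

Claude does not move the mouse itself. Per Anthropic's documentation, it replies with `tool_use` blocks requesting actions such as taking a screenshot, clicking at given coordinates or typing some text. The developer's own code carries out each action and sends the result back in a follow-up message, looping until the task is done.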

You can prompt Claude to perform tasks on your computer using a basic chat interface.

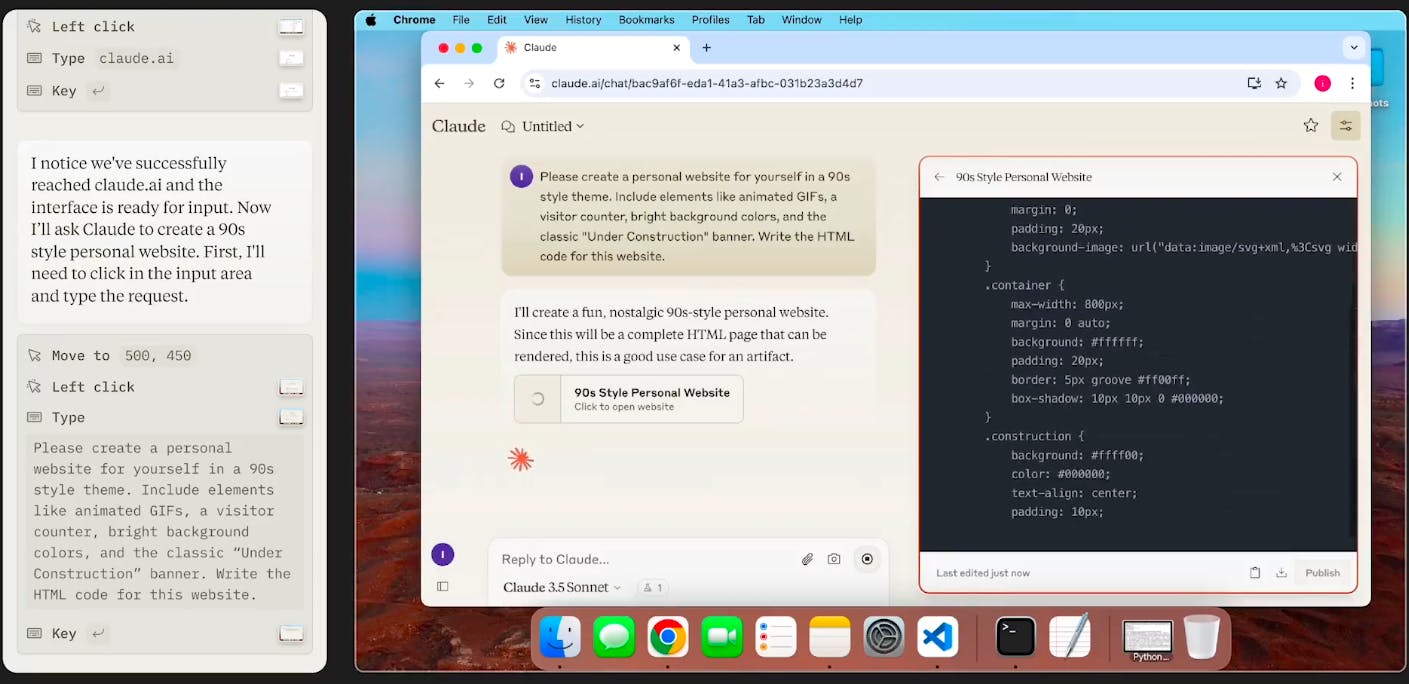

Tasks can include anything from loading programs to typing. You can even use desktop Claude to interact with browser-based Claude, as developer relations lead Alex Albert demonstrates in a video.

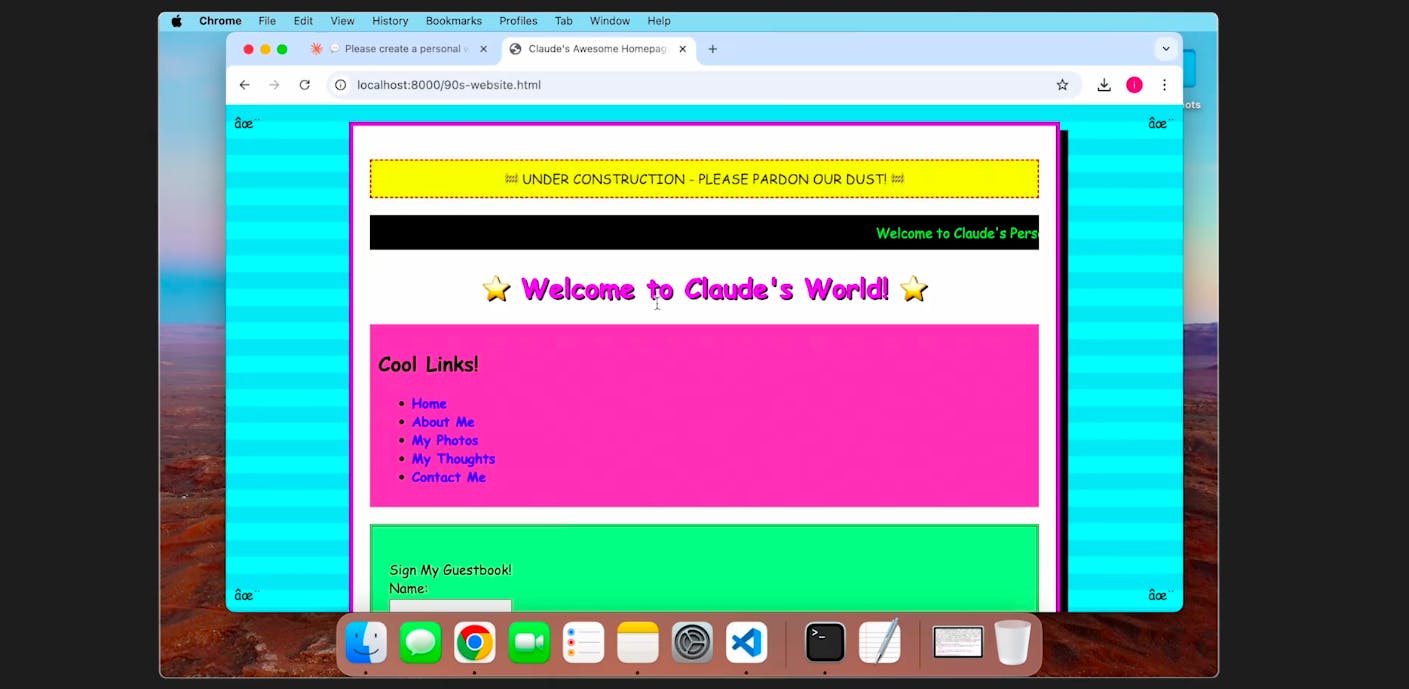

He asks desktop Claude to open a browser, go to Claude.ai and prompt it to make a simple website, as shown in the image above.

Once the site is ready, desktop Claude saves the code to Albert's laptop and works with it directly in VS Code.

When Albert spots an error after the site goes live on a local server, he asks Claude to look at the page (yes, look), find the issue and fix it.

The same skills can be used for all kinds of simple tasks.

In another demonstration, researcher Pujaa Rajam asks Claude to work out the logistics for a sunrise trip to San Francisco's Golden Gate Bridge. It maps out the trip, searches the web for the best time to leave, and even creates a calendar event.

Anthropic says Claude can "automate repetitive tasks, conduct testing and QA, and perform open-ended research."

But the company warned on X that these "groundbreaking" skills are "still experimental—and at times, error-prone."

During the production of one of Anthropic's demos, Claude accidentally stopped a long-running screen recording, losing all the footage.

"Some actions that people perform effortlessly—scrolling, dragging, zooming—currently present challenges. So we encourage exploration with low-risk tasks. We expect this to rapidly improve in the coming months."

Performance boosts for Sonnet and Haiku

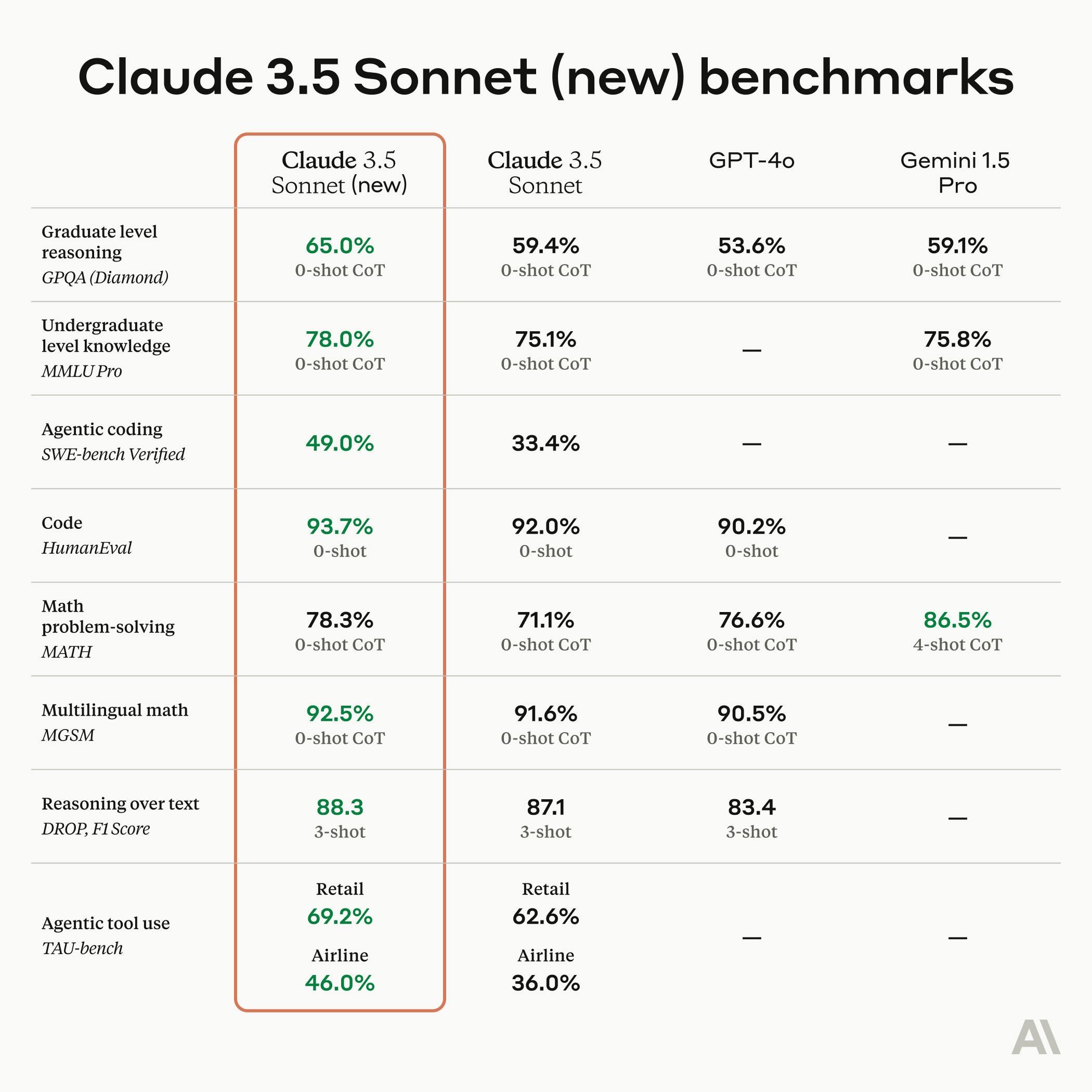

Beyond its new computer skills, Claude 3.5 Sonnet offers improved performance over previous versions.

It's better on most standard measures for reasoning, knowledge and coding than both GPT-4o and Google's Gemini 1.5 Pro.

But it does lag significantly behind the latter on math problem-solving as measured by the MATH benchmark.

Anthropic is particularly excited about Sonnet's coding skills, which already outperformed OpenAI's GPT models in previous versions.

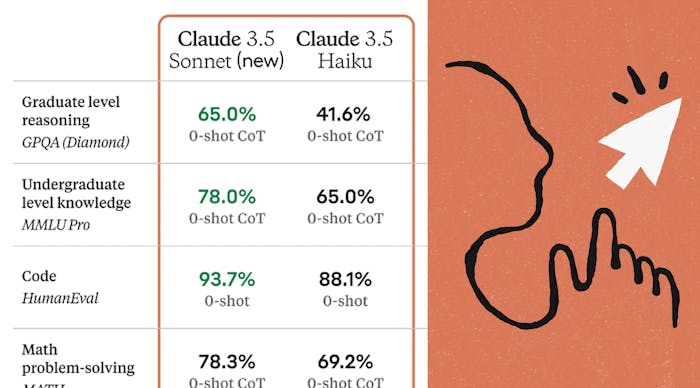

Here's the full benchmarking against OpenAI's GPT family:

The firm has also juiced up its lighter Haiku model, which should be released towards the end of October. It will start as a text-only model, with image input planned for the future.

Claude 3.5 Haiku will offer much-improved reasoning and knowledge over its predecessor, Claude 3 Haiku.

It doesn't stack up as well against its rivals across the board as Sonnet does, but it does beat them on coding.

Tried many AI tools and I keep coming back to Claude

It'd be better if we saw o1 and Llama 3.2 in the comparison. Only then would we know if it's powerful enough.

Interesting that they didn't include o1 in the comparison. It almost feels like a marketing play, since o1 is claimed to perform better in many of these categories. I wonder what everyone else thinks?

It's worth mentioning that Anthropic is not the first to attempt UI navigation. Adept (2022) raised $350M just to do UI navigation, but they haven't publicly shipped anything yet. A few others have also tried: MultiOn, Induced, Rabbit, HyperWrite's PA, Autotab, etc.

All have pivoted to workflow automation because general purpose UI navigation is flaky AF, including Anthropic's.

A couple of research breakthroughs are needed to get this right: one in reliable agentic workflows, and another in VLMs capable of segmenting and navigating UIs.

loving the pace these folks are moving at. look forward to a fun wave of devs working on this!

cool man

I really want to move to Claude from ChatGPT, but the only reason I'm on ChatGPT is that it comes with DALL-E. If Claude offers image generation in the paid plan, I'll switch immediately.

That would be amazing!

I'm stuck with ChatGPT for the same reason

so am i T-T