The largest ever copyright-free AI training dataset just

dropped

The open source dataset contains two trillion tokens worth of completely permissable content.

AI company Pleias has published the massive copyright-free dataset it uses to pretrain its language models.

Sourced from financial and legal documents, open-source code, academic journals, and public domain publications, Pleias says its the largest entirely permissable multilingual dataset available.

At just over two trillion tokens, "Common Corpus" is substantial. It holds far more tokens than OpenAI used to train GPT-3 (approximately 300 billion), but far fewer than the firm used to train GPT-4 (approximately 13 trillion).

For context, a token is a tiny unit of language a little smaller than most words.

Everything in the Common Corpus dataset is documented and traceable, in addition to having a permissable license. Should you wish, you can find the source of each and every one of its tokens.

This means it may be better protected from tightening regulations around data used to train large language models.

The dataset is designed for training generalist models, which can be used them for all kinds of queries, as well as tasks like writing and coding.

Developers can "fine-tune" generalist models with more specific data to make them experts in tighter areas of knowledge.

Pleias has trained its own open models on its copyright-free dataset. It also owns several AI assistants designed for companies that use sensitive data or work in regulated industries.

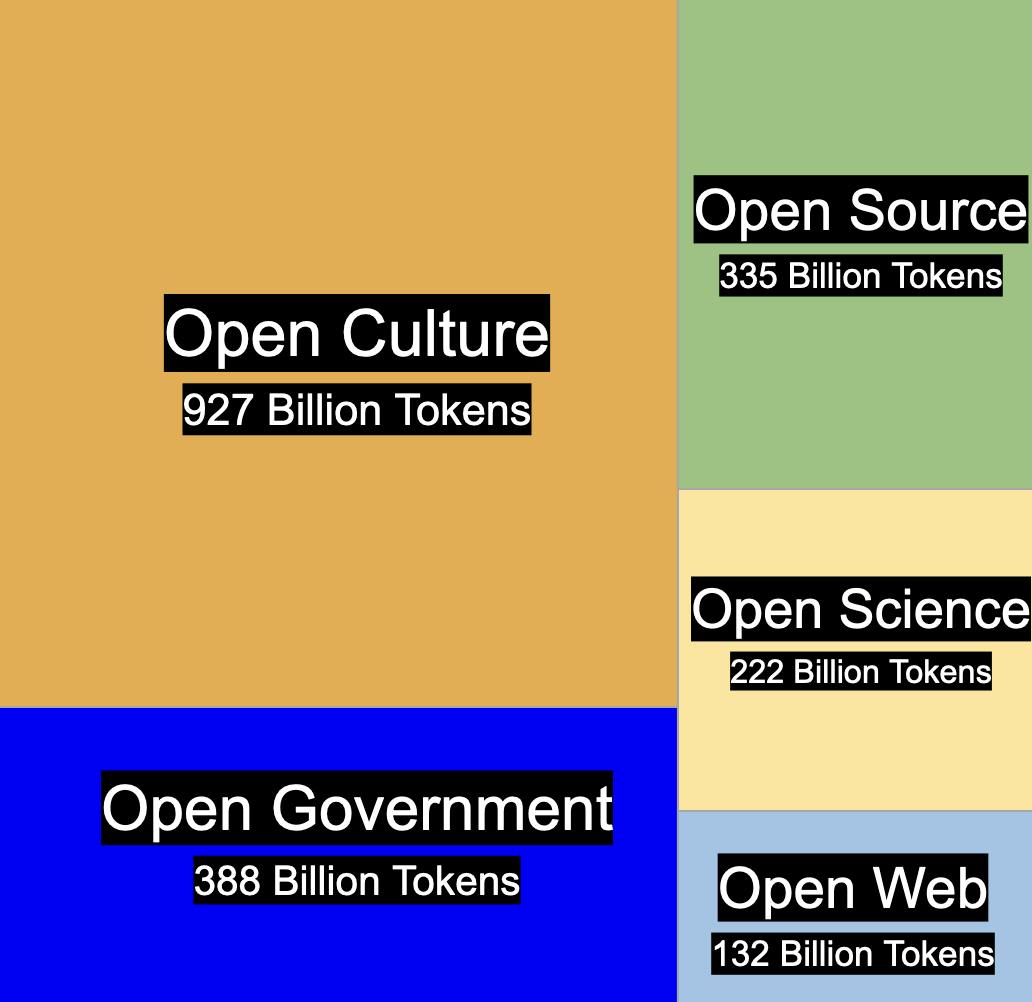

Common Corpus is made up of five categories of data: Open Culture, Open Government, Open Source, Open Science and Open Web, as you can see from the visualization above.

Open Culture is made up of public domain books, newspapers, and Wikisource content. Digitization errors have been corrected.

Open Government data includes financial and legal documents designed to provied "enterprise-grade training data from regulatory bodies and administrative sources."

Open Source is made up of high quality open source code from Github.

Open Science features scientific articles from open science repositories including. It's been processed visually so important elements like tables and charts are preserved.

OpenWeb includes data from websites like Wikipedia and YouTube Commons.

Although it's mostly made up of English-language text, the dataset also contains a substantial amount of information in other languages — particularly French and German.

Pleias says Common Corpus shows it's possible to train large language models without relying on copyrighted material, contrary to the claims of some major AI firms.

OpenAI previously told U.K. lawmakers it was "impossible" to create LLMs like GPT without this protected content, "because copyright today covers virtually every sort of human expression — including blogposts, photographs, forum posts, scraps of software code, and government documents."

The company has already faced legal challenge over the data used to train its GPT LLM family. The New York Times sued OpenAI and Microsoft for copyright infringement last year, alleging that millions of its articles had been used to train LLMs.

Models trained on Pleias' data shouldn't be vulnerable to these kinds of copyright claims. They should also be safe from wider AI transparency legislation, like the European Union's new EU AI Act, which requires companies to publish summaries of model-training data.

"Many have claimed that training large language models requires copyrighted data, making truly open AI development impossible," Pleias co-founders Pierre Carl-Langlais, Anastasia Stasenko and researcher Catherine Arnett wrote in a blog post for AI community HuggingFace.

"We have taken extensive steps to ensure that the dataset is high-quality and is curated to train powerful models," they added. "Through this release, we are demonstrating that there doesn’t have to be such a [heavy] trade-off between openness and performance."

Common Corpus is available for free via HuggingFace. It's part of the AI Alliance Open Trusted Data Initiative, which aims to combat the "murky provenance" of many datasets.

"Wow, this is a game-changer! It’s refreshing to see a company like Pleias take such a transparent and ethical approach to building a dataset. The fact that everything in Common Corpus is traceable and copyright-free really sets a new standard for AI training. It’s like a win-win: powerful models without the legal and ethical gray areas.

Also, the diversity in the dataset categories—especially Open Science and Open Source—is impressive. Preserving elements like tables and charts in scientific articles? That’s next-level attention to detail.

It’s exciting to see the AI space moving towards openness and trust. Definitely keeping an eye on what Pleias and the AI Alliance Open Trusted Data Initiative do next!"