Improving accessibility using vision models

Math is hard enough, why make it harder for students?

One project I worked on recently was migrating a massive set of math courses from one platform to another. Along the way we realized some of our math courses had not been updated in quite some time, and some schools were still using these courses to teach.

Images for equations are bad m’kay

What was immediately apparent was the use of images to represent equations, like this:

This is not great… the font is on the small side and not very legible, in my non-font-expert opinion. Making matters worse, there is no alt text provided to explain the equation. So I asked the question: could an LLM help here?

Getting answers

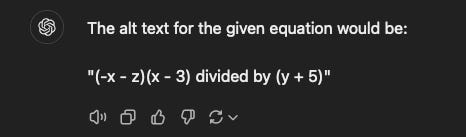

Putting the equation into ChatGPT yielded a great answer

“Provide the alt text for this equation”

I wanted to ensure this wasn’t just a fluke, especially since I had thousands of images to process. So, I took a few hundred of them, annotated each with the correct LaTeX answer, and compared the results using GPT-4o and Gemini.

For context, I used a directory of 300 images and a SQLite database containing the LaTeX answers. I then ran a Python script that processed each image through three models: GPT-4o, Gemini 1.5 Pro, and Gemini 1.5 Flash.
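A minimal sketch of what such an evaluation loop could look like. The table name `answers`, its columns, the whitespace-insensitive comparison, and the `transcribe` callable are all assumptions made for illustration; the original script and schema aren't shown here. The model call sits behind a plain function so the harness can be tried without any API keys.

```python
import sqlite3
from pathlib import Path
from typing import Callable, Dict, List

# Hypothetical signature: (model_name, image_path) -> LaTeX string.
Transcriber = Callable[[str, Path], str]

MODELS = ["gpt-4o", "gemini-1.5-pro", "gemini-1.5-flash"]


def normalize(latex: str) -> str:
    """Drop all whitespace so spacing differences don't count as errors (an assumption)."""
    return "".join(latex.split())


def evaluate(conn: sqlite3.Connection, image_dir: Path, transcribe: Transcriber) -> List[Dict]:
    """Run every annotated image through every model and record whether it matched."""
    # Assumed schema: answers(filename TEXT, latex TEXT)
    rows = conn.execute("SELECT filename, latex FROM answers ORDER BY filename").fetchall()
    results = []
    for filename, expected in rows:
        for model in MODELS:
            predicted = transcribe(model, image_dir / filename)
            results.append({
                "filename": filename,
                "model": model,
                "expected": expected,
                "predicted": predicted,
                "correct": normalize(predicted) == normalize(expected),
            })
    return results


if __name__ == "__main__":
    # Tiny in-memory stand-in for the real database of annotated answers.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE answers (filename TEXT PRIMARY KEY, latex TEXT)")
    conn.execute("INSERT INTO answers VALUES ('eq1.png', 'y = 2x - 3')")

    # Fake model call; a real one would send the image to the provider's API.
    def fake_transcribe(model: str, path: Path) -> str:
        return "y=2x-3" if model == "gemini-1.5-flash" else "y=2x=3"

    for row in evaluate(conn, Path("images"), fake_transcribe):
        print(row["model"], row["correct"])
```

Keeping one result row per (image, model) pair makes it easy to slice the data later by model or by equation length.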

The results

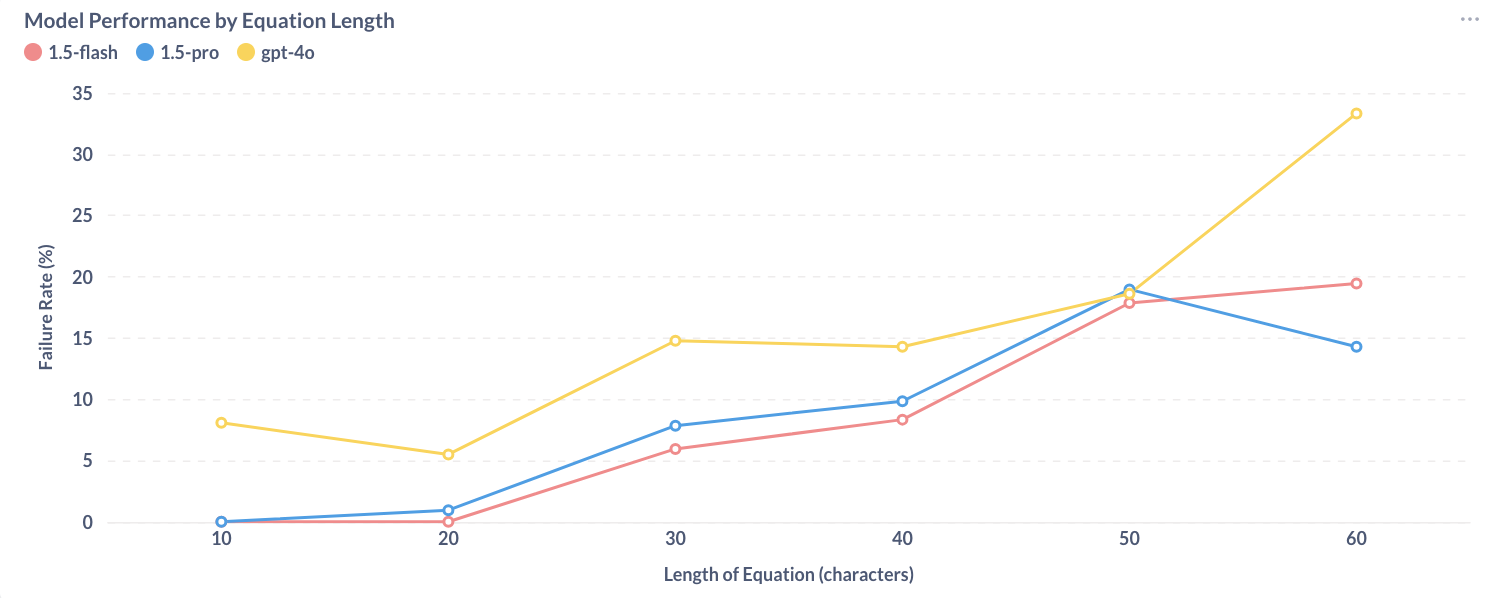

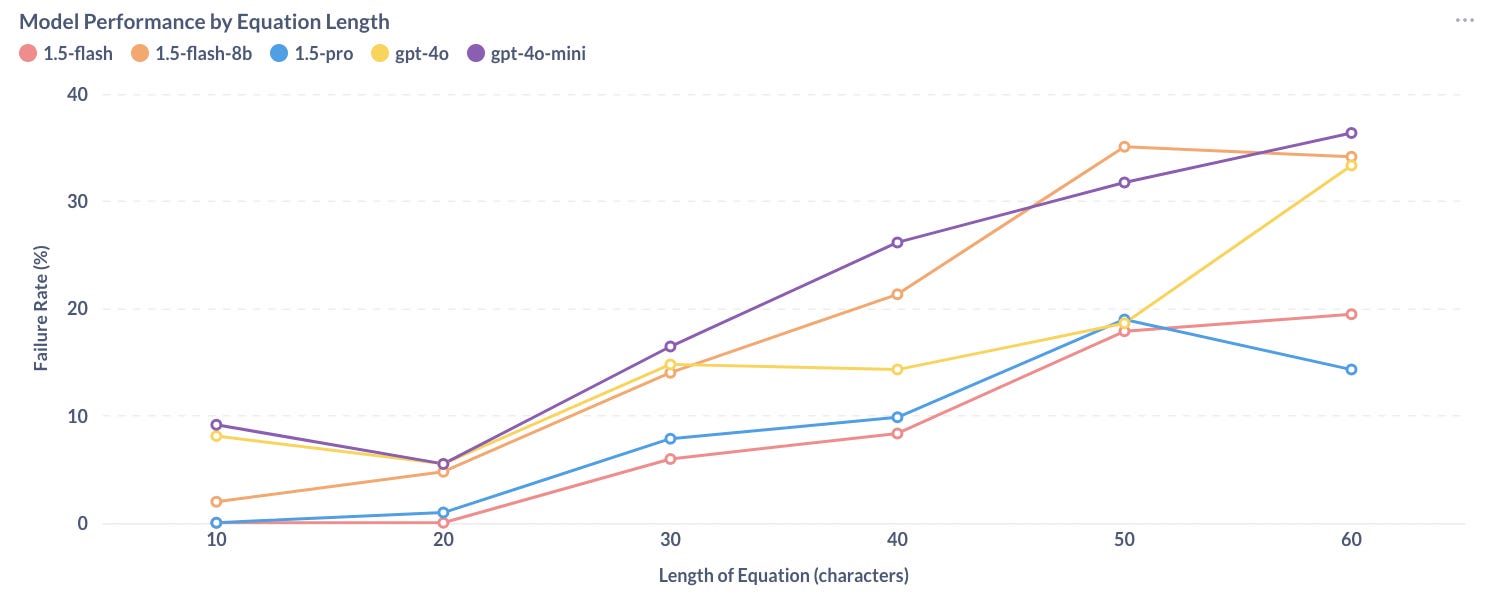

This is a graph of error rate compared to the length of the equation. Because of how the data set is distributed, there are many more short equations than long ones. I’ve bucketed them into lengths that are multiples of 10, so bucket one is anything up to 10 characters, bucket two is 11-20 characters, and so on. I knew the error rate would go up with length, but it is interesting to see all three models struggle around the 30 character mark.

The most interesting thing to me is the performance of gemini-1.5-flash, which does better on everything but the largest images, yet costs a fraction of the price. In fact, it has no errors at all on our most common equations. I ran this three times and the result was the same every time.
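The bucketing could be sketched roughly like this. It assumes that "length" means the character count of the annotated LaTeX answer and that each result is a simple `(expected_latex, was_correct)` pair; both are my assumptions for illustration, not details from the original script.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def length_bucket(latex: str, width: int = 10) -> int:
    """Bucket 1 covers 1-10 characters, bucket 2 covers 11-20, and so on."""
    return max(1, (len(latex) + width - 1) // width)


def error_rate_by_bucket(results: Iterable[Tuple[str, bool]]) -> Dict[int, float]:
    """Map each length bucket to the fraction of answers that were wrong."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for expected, correct in results:
        bucket = length_bucket(expected)
        totals[bucket] += 1
        if not correct:
            errors[bucket] += 1
    return {bucket: errors[bucket] / totals[bucket] for bucket in sorted(totals)}


if __name__ == "__main__":
    sample = [("x+1", True), ("y=2x-3", False), ("a^2+b^2=c^2+d", True)]
    print(error_rate_by_bucket(sample))  # {1: 0.5, 2: 0.0}
```

Since the short buckets hold far more samples than the long ones, it is worth reading the rates for the longer buckets alongside their sample counts.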

Compare and contrast

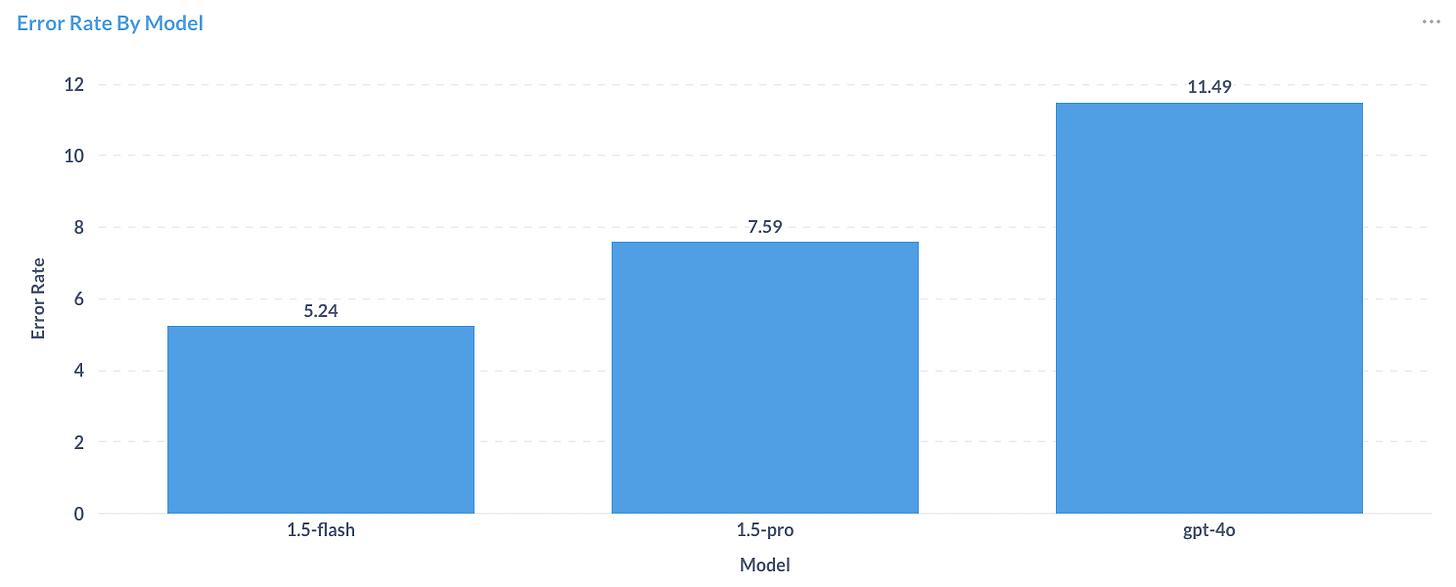

Error Percent by Model

Now that we have this data, we can pivot to look at where gemini-1.5-flash got the answer correct but gpt-4o got it wrong.

Overwhelmingly, there are two main errors:

- gpt-4o confuses a minus symbol with an equal sign. This happens a lot where the character “y” is present; it seems to bias towards y=mx+b.

- gpt-4o just gets the characters wrong, e.g. mistaking a “Z” for a “2”.
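One way this pivot could be done in code, as a rough sketch. The row layout (dicts with `filename`, `model`, `expected`, `predicted`, `correct`) and the swap heuristic are hypothetical, and the heuristic assumes both strings are already whitespace-normalized.

```python
from collections import defaultdict
from typing import Dict, List


def is_minus_equals_swap(expected: str, predicted: str) -> bool:
    """True if the only differences are '-' in the answer being read as '='."""
    if len(expected) != len(predicted) or expected == predicted:
        return False
    return all(e == p or (e == "-" and p == "=") for e, p in zip(expected, predicted))


def disagreements(results: List[Dict], winner: str, loser: str) -> List[Dict]:
    """Return the loser's rows for images the winner got right and the loser got wrong."""
    by_image = defaultdict(dict)
    for row in results:
        by_image[row["filename"]][row["model"]] = row
    picked = []
    for rows in by_image.values():
        if winner in rows and loser in rows:
            if rows[winner]["correct"] and not rows[loser]["correct"]:
                picked.append(rows[loser])
    return picked


if __name__ == "__main__":
    rows = [
        {"filename": "a.png", "model": "gemini-1.5-flash", "expected": "y-2x+3",
         "predicted": "y-2x+3", "correct": True},
        {"filename": "a.png", "model": "gpt-4o", "expected": "y-2x+3",
         "predicted": "y=2x+3", "correct": False},
    ]
    for row in disagreements(rows, "gemini-1.5-flash", "gpt-4o"):
        print(row["filename"], is_minus_equals_swap(row["expected"], row["predicted"]))
```

Anything the heuristic doesn't catch, such as character mix-ups, still needs a manual look.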

Are vision models usable for equation images?

Given this result, we used gemini-1.5-flash to rebuild our math equations into LaTeX, which our Learning Management System (LMS) already natively supported. Since we knew anything longer than 20 characters would tend to have more issues, we flagged those for manual review. Only 27% of questions had equations longer than this limit, turning a relatively huge overhaul into a fraction of the work. Additionally, we avoided having to move customers onto new course materials, which is costly and often requires many layers of approval.
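The flagging step might look something like the sketch below. It assumes the length check is applied to the generated LaTeX string, which is my guess rather than a detail of the actual pipeline; the function and constant names are made up for illustration.

```python
from typing import List, Tuple

REVIEW_THRESHOLD = 20  # characters; anything longer goes to a human


def triage(equations: List[str], threshold: int = REVIEW_THRESHOLD) -> Tuple[List[str], List[str]]:
    """Split generated LaTeX into (auto_accepted, needs_manual_review)."""
    auto, manual = [], []
    for latex in equations:
        (manual if len(latex) > threshold else auto).append(latex)
    return auto, manual


if __name__ == "__main__":
    auto, manual = triage(["y=2x-3", r"\frac{a^2+b^2}{c^2}=\sqrt{d}"])
    print(len(auto), "accepted;", len(manual), "flagged for review")
```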

Extras

Here is the full data set, including gpt-4o-mini and the newly released gemini-flash-1.5-8b (great naming btw Google). I left these out because they just clutter the graph; their performance is terrible for this task.

This article was originally published here.
