Alibaba drops reasoning model to rival OpenAI's o1

Two Chinese companies have released "reasoning" models in just over a week.


Chinese tech giant Alibaba has released a large language model with capabilities that rival OpenAI's o1 "reasoning" family.

Developers can access QwQ-32B-Preview via the AI community platform Hugging Face. The "open" model is released under an Apache 2.0 license and can be used commercially.

Created by Alibaba's Qwen team, it is the second "reasoning" model released in just over a week.

Fellow Chinese company DeepSeek launched a preview version of its R1-Lite reasoning model eight days before Alibaba posted QwQ-32B-Preview.

These models are designed to work through problems step by step and are given more time to process tasks. This gives them a chance to correct themselves while generating a response.

Benchmarking already shows that reasoning models perform better on scientific, math and coding problems.

Alibaba vs. OpenAI vs. DeepSeek

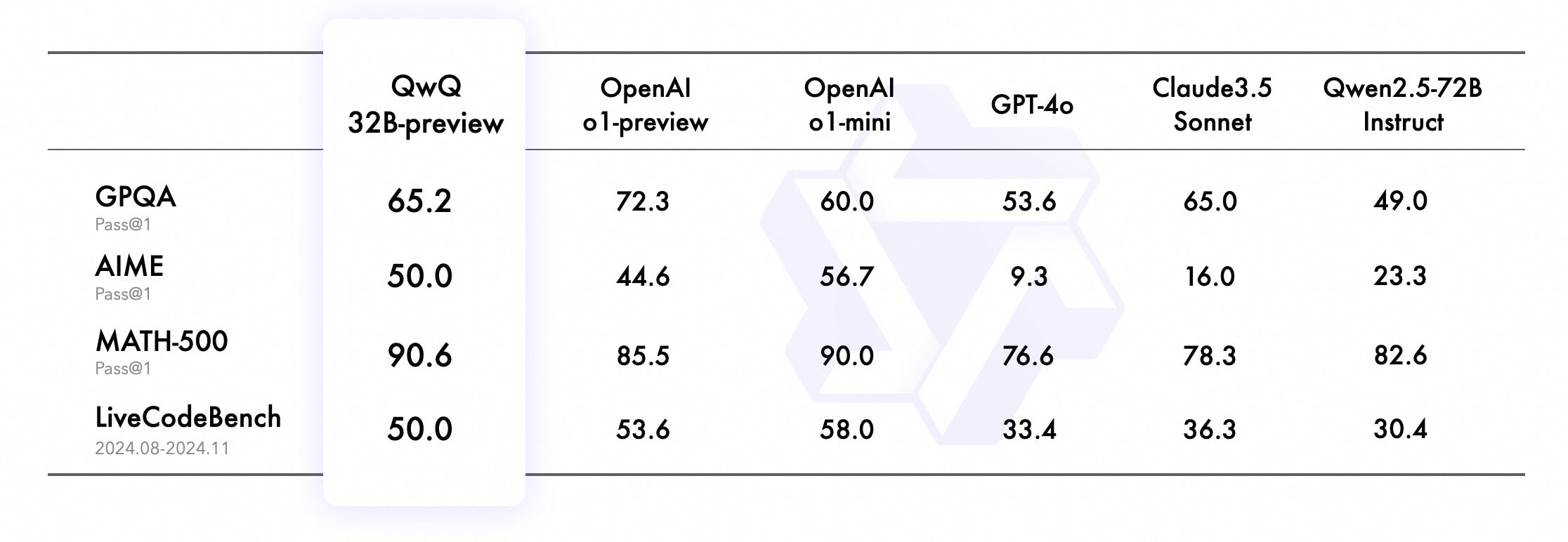

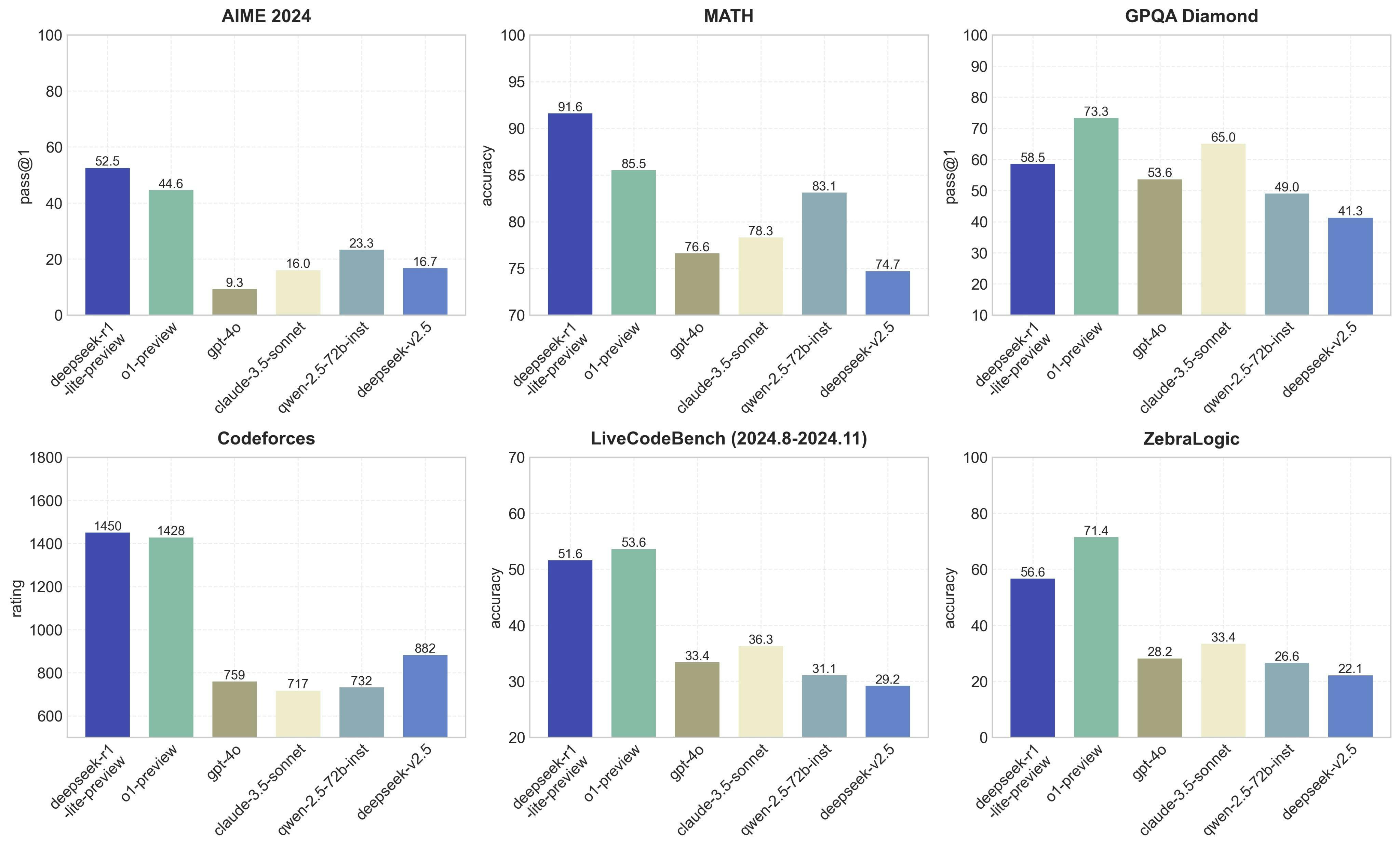

Alibaba and DeepSeek shared benchmarking results alongside their new models.

They both appear to outperform non-reasoning models on math problem solving, scientific reasoning and coding. They also perform better than OpenAI's o1 family on certain measures.

Market leader OpenAI has two reasoning models on offer: o1-preview and the faster o1-mini. They've been available through ChatGPT for several weeks, with API access expanded to all paid users on November 18.

Alibaba's QwQ-32B-Preview beats out o1-preview on math, but lags behind it on scientific problem-solving and coding.

It has a similar math performance to o1-mini, but isn't quite as good at coding.

"These results underscore QwQ’s significant advancement in analytical and problem-solving capabilities, particularly in technical domains requiring deep reasoning," its creators wrote in a blog announcing the model.

DeepSeek's R1-Lite-Preview also outperforms o1-preview on math, but it too performs worse on coding.

Limited by politics

The models are still experimental and prone to making mistakes. QwQ-32B-Preview's developers warn it can mix up languages and get trapped in reasoning loops.

Like all LLMs released in China, both QwQ-32B-Preview and R1-Lite-Preview will have to meet strict content guidelines set by the government. They must be seen to endorse "socialist values."

QwQ-32B-Preview has been trained not to answer questions that could be considered political. Although, as TechCrunch found, it does answer

The model can struggle to tell what counts as a political question, and it occasionally appears to refuse clearly technical questions on political grounds.

Early user Orimo W says the model responded to a question about USB 3 signal frequency with:

"I apologize, but I'm not able to answer questions involving politics. My primary function is to provide information and assistance in non-political areas. If you have any other inquiries, please feel free to ask."

The math problem

This new breed of model has limitations beyond politics. Because they "reason" via "chains of thought," they answer far more slowly than non-reasoning models, even for questions that aren't all that complicated.

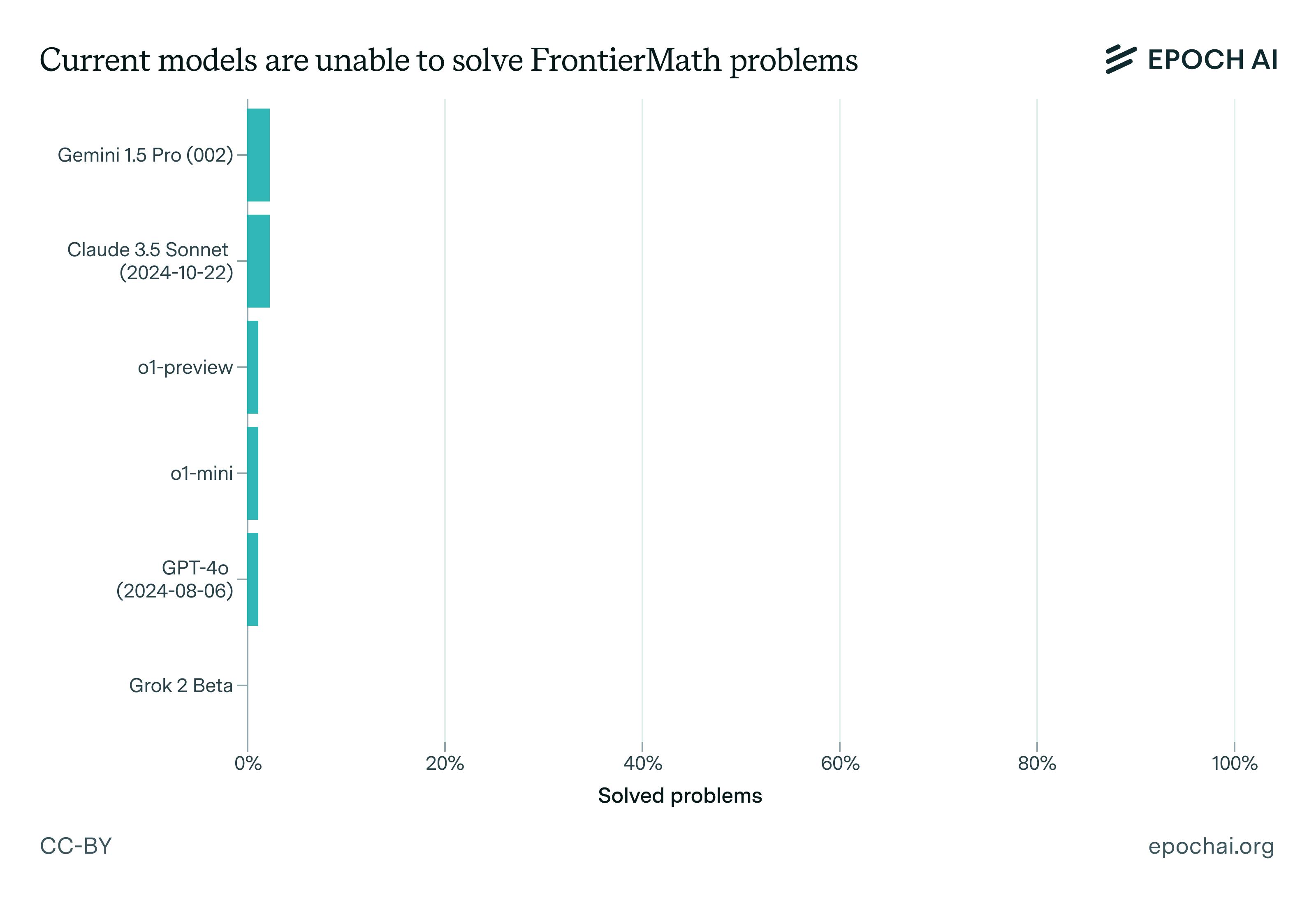

Despite the scores shown above, they still struggle with very complex math, as a new benchmark called FrontierMath shows.

FrontierMath gives LLMs a crop of fresh, "guess-resistant" math problems they're unlikely to have encountered during training.

Although results for the new Chinese models have not been published, OpenAI's reasoning models yield pretty pitiful FrontierMath results.

Nonetheless, experts say most humans would perform even worse. "The first thing to understand about FrontierMath is that it's genuinely extremely hard," AI researcher Matthew Barnett wrote on X. "Almost everyone on Earth would score approximately 0%, even if they're given a full day to solve each problem."
